The past few years have seen an explosion of interest, usage, and growth in using interactive prototypes to build, evaluate, and iterate on design work. Coupled with the rise in style guides and atomic design, the prototype is now often the primary deliverable many designers create.

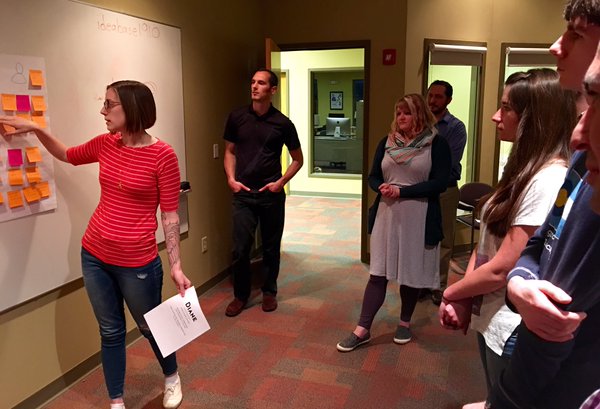

Thankfully, the tools to create prototypes have kept pace; there are myriad choices available, with something new posted with shocking frequency on design blogs. How do you select a tool for your workflow? What are the pros and cons of different types of software? I’ve prepared some notes to help guide you, and focus your search. I was inspired to do this after a great session prompted by UX Akron.

Before getting much further, I’d like to address wireframes. Though the term has fallen out of favor lately, I think that wireframes are still incredibly relevant and useful. The difference between a rough prototype and a clickable wireframe is just terminology. I don’t believe wireframes need to be static, nor do I think prototypes need a certain level of fidelity. The differences seem largely semantic and based on industry zeitgeist. The level of fidelity should be based on the project and your needs, not a pre-determined bias.

There are 3 main categories that modern tools fall into:

- Screen or page focused

- State or layer focused

- Code focused

Some tools are a hybrid of these, but it makes it simpler to divide our choices up and evaluate them that way.

Screen or page focused

In a nutshell, these let you create pages and/or import static images and then easily create hotspots to link to other pages. This can sometimes be automated so that whenever your design files are updated so are your prototype screens.

How much interaction you can add, and the level of animation is somewhat limited, but the upside is that these tools are extremely easy to get up and running with. They’re an ideal choice for a scenario where you need higher fidelity than just boxes and text; if you’re adding features to an existing app for instance. Conversely though, tools like Balsamiq are page based with the goal of keeping things lo-fi, so there are options on both ends of the spectrum. I also include Keynote and PowerPoint, which weren’t designed as prototyping tools but have plenty of functionality to work, and work quickly.

Popular page/screen focused tools are Balsamiq, InVision, Flinto, Marvel, Fluid UI, Keynote, and PowerPoint.

State or layer focused

In contrast are tools focused on changing states, or layers. You can have multiple layers on a single page, dynamic states within a single layer, variables/conditionals for elements, and fairly detailed control for time-based animations and interactions.

With all of this, you’re afforded more control than page-based tools including the ability to link between pages/views in more complex ways. But they tend to be more expensive and complex due to an extensive feature list, bringing a larger learning curve.

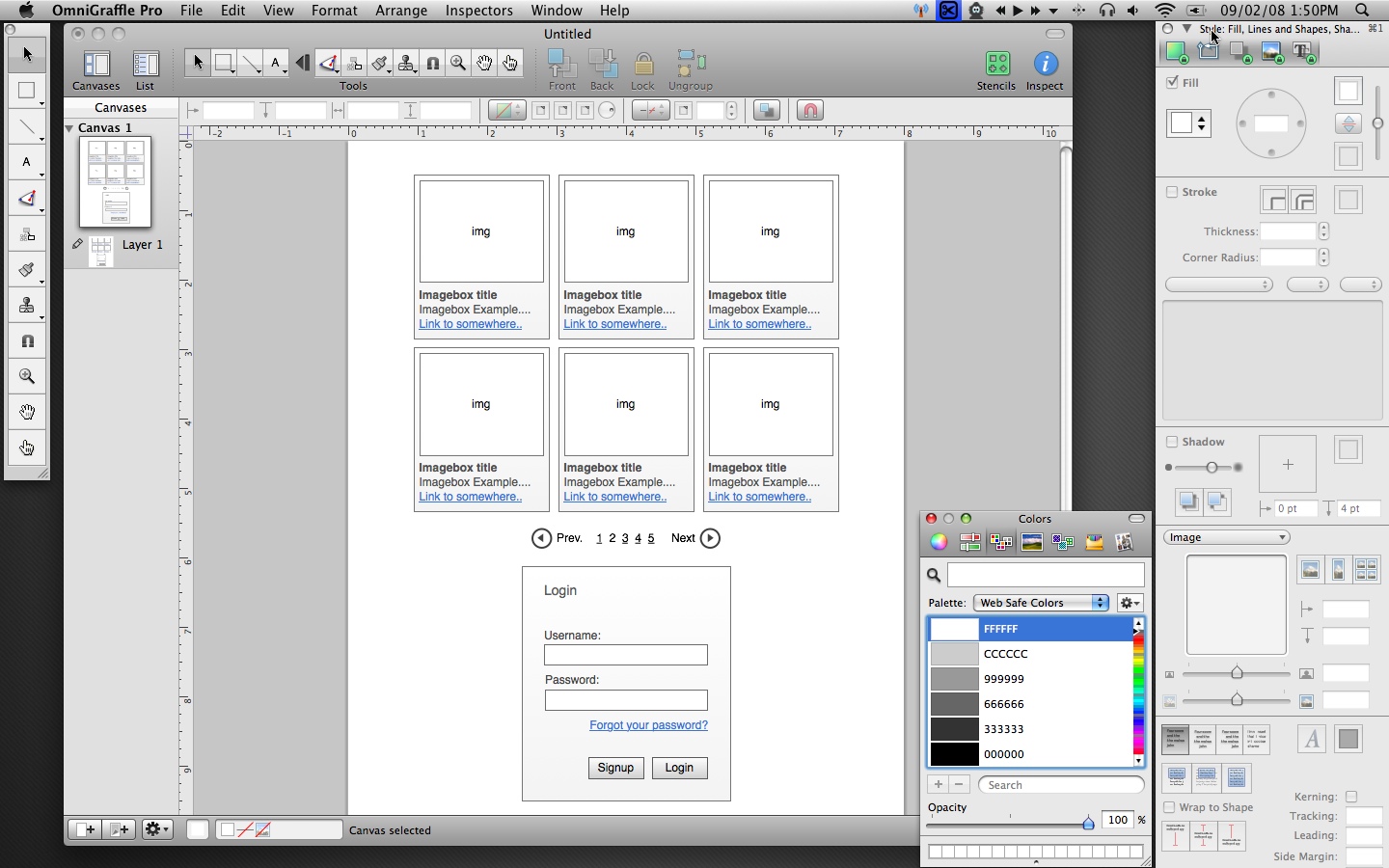

Some tools I lump into this category are Axure, OmniGraffle, Proto.io, Pixate, and Indigo Studio.

Code focused

The last group are basically libraries that help you when creating prototypes programmatically. This gives you essentially complete control over prototypes.

Creating prototypes this way can help communicate your ideas easily to developers, because the developers can look into the code and possibly translate it into production quality code, or at least understand the intention behind it.

The challenges with this are that it’s highly dependent upon your programming skills. It’s also often more difficult to share and present with other members of your team, since there aren’t typically built in web viewers or presentation wrappers.

Quartz Composer with Origami and Framer JS are two examples of tools that fall into this category.

The short answer is obviously that it depends… Each project will have different needs, and you or your team (or clients) will have different requirements too.

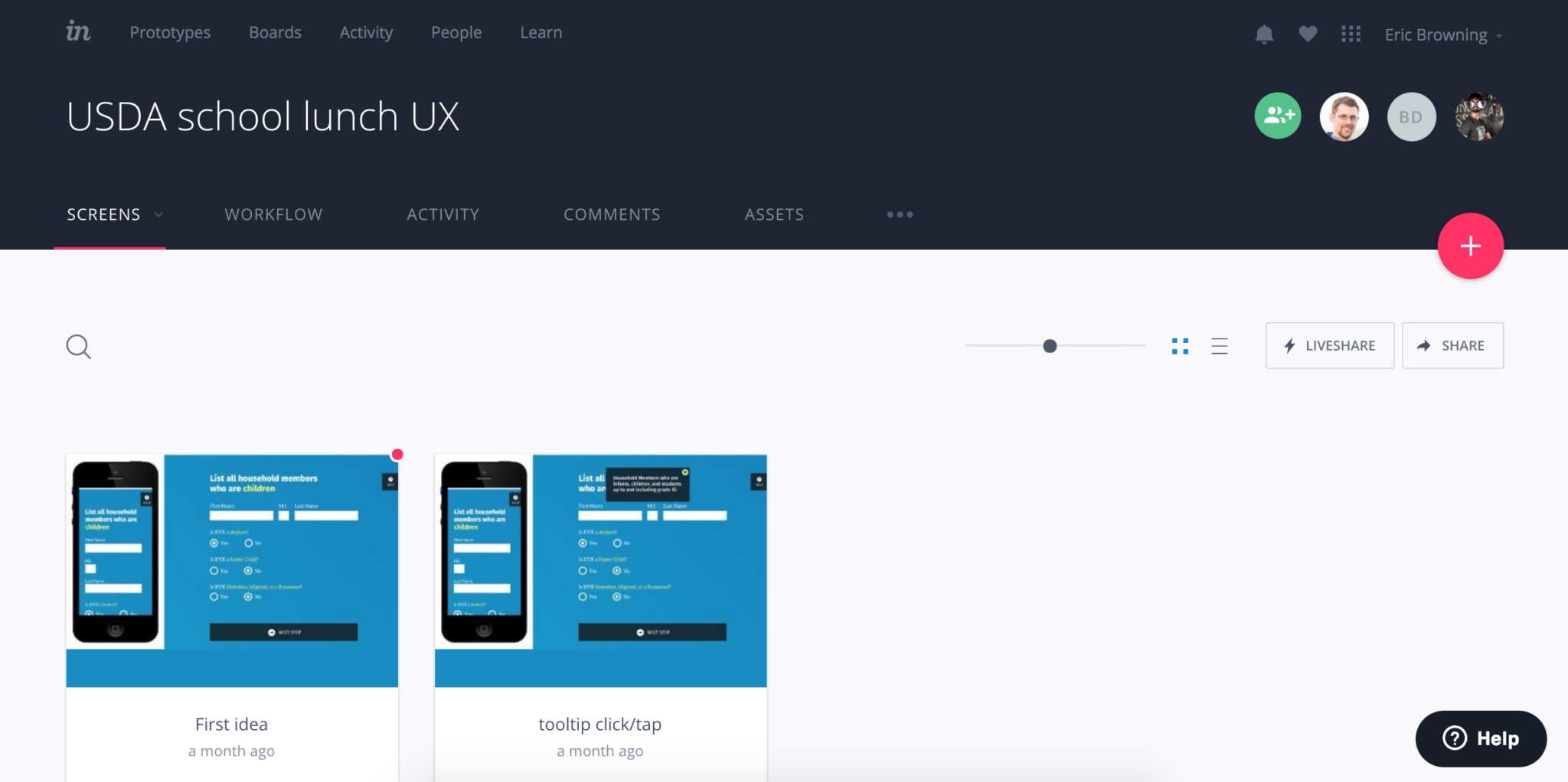

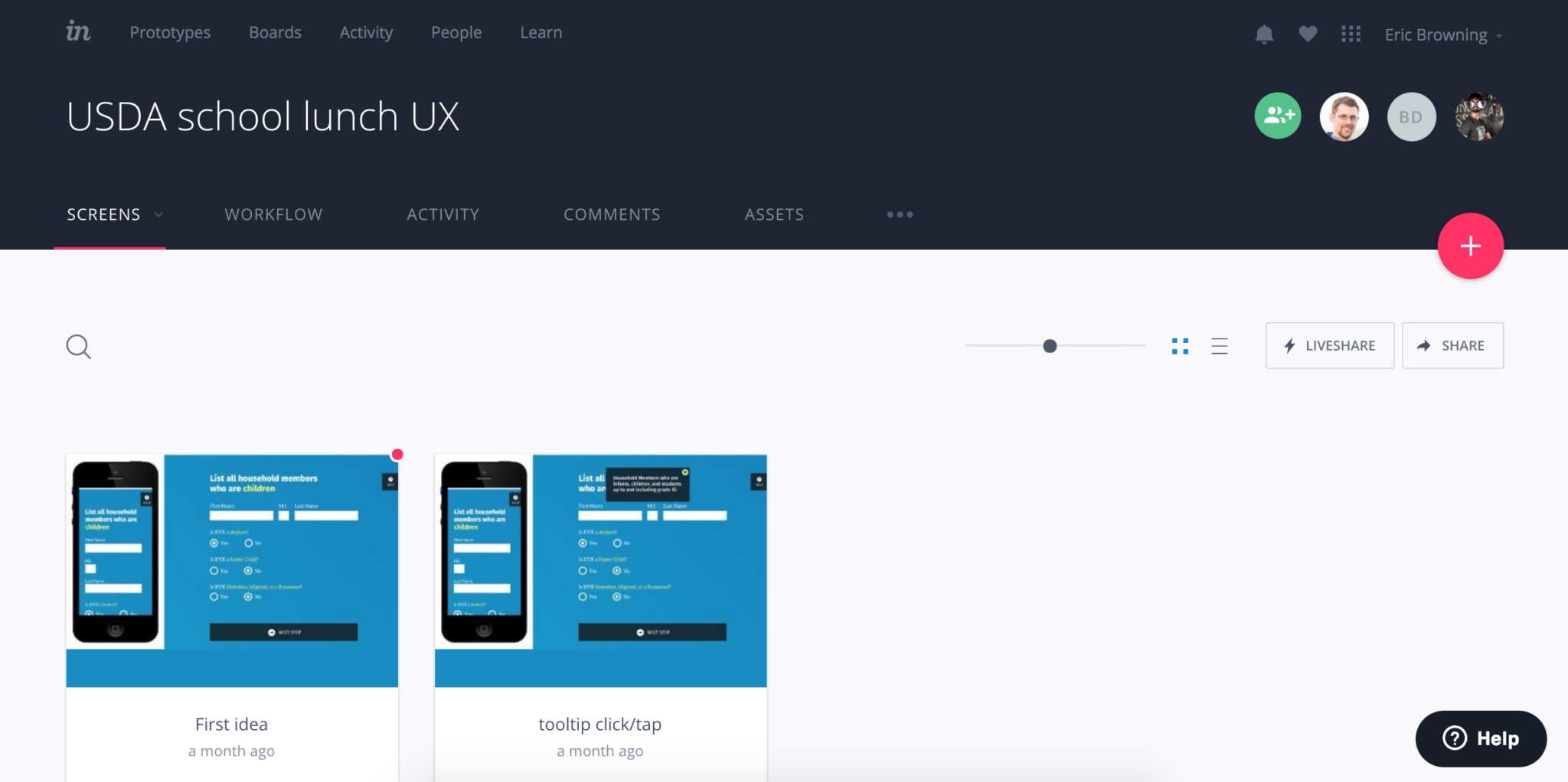

With that being said, I turn to screen based tools first, specifically InVision. My experience is as a designer so having synced files from Sketch or Photoshop is incredibly handy and lets me explore ideas quickly. The prototyping and animation features are limited, but it fits the use cases I’ve had, and having such a low learning curve is wonderful for a working professional.

If there’s a need for a larger prototype I’ve used Axure as well. Visual fidelity wasn’t the goal, but for pure prototyping its organization with complex projects and the ability for team members to collaborate was invaluable.

Don’t worry (too much) about the software

Ultimately, any of the tools here (and all of those that I missed) will allow you to build prototypes. The most important things are the process and your communication; no tools can overcome those. Showing your team a rough prototype early on is much more valuable than spending days getting something perfect, only to realize you’re too late in the process and the project has already moved forward. Explore the options, find something you like, and do great work.